Tesla FSD has landed: how far behind are China's players?

Written by / Tu Yanping

Edited by / Huang Dalu

Design / Zhao Yongshun

In May 2023, Musk revealed that Tesla FSD V12 would adopt an end-to-end approach. In March 2024, Tesla began rolling out V12 at scale.

According to the third-party website FSD Tracker, after the FSD V12 update the share of trips completed with no driver takeover at all rose from 47% to 72% compared with the previous version, and the average miles per intervention (MPI) rose from 116 miles to 333 miles.
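The two FSD Tracker figures quoted above can be put side by side with a little arithmetic. The sketch below is illustrative only: the function and variable names are hypothetical, and the inputs are just the numbers cited in this article, not raw FSD Tracker data.

```python
def miles_per_intervention(total_miles: float, interventions: int) -> float:
    """Average miles driven between driver takeovers (MPI)."""
    if interventions <= 0:
        raise ValueError("interventions must be positive")
    return total_miles / interventions


# Figures quoted in the article (before and after FSD V12).
mpi_before, mpi_after = 116.0, 333.0
clean_before, clean_after = 0.47, 0.72  # share of trips with no takeover

mpi_gain = mpi_after / mpi_before                        # relative MPI improvement
clean_gain_points = (clean_after - clean_before) * 100   # percentage-point change

print(f"MPI improved about {mpi_gain:.1f}x")
print(f"Takeover-free trips rose by {clean_gain_points:.0f} percentage points")
```

On these numbers, the distance between interventions nearly tripled, while the share of takeover-free trips rose by 25 percentage points.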

With Tesla FSD V12, end-to-end autonomous driving technology has shown considerable strength.

With AI and large model technology entering a new stage, end-to-end has become the focus of the autonomous driving industry. So what is end-to-end, and how far has the technology evolved?

In June 2024, Chentao Capital, the Autonomous Driving Branch of the Nanjing University Shanghai Alumni Association, and Jiuzhang Zhijia jointly released the 2024 End-to-End Autonomous Driving Industry Research Report (hereinafter "the Report"), which analyzes end-to-end autonomous driving from every angle: basic concepts, participants, development drivers, deployment challenges, and future prospects.

The Report systematically sorts out the concepts behind end-to-end autonomous driving, proposes a terminology system for reference, and defines what end-to-end means.

The Report points out that the early core definition of end-to-end was "a single neural network model from sensor input to control output". In recent years the concept has been broadened. According to the Report, the core criteria for end-to-end should be lossless transmission of perception information and global optimization of the autonomous driving system.

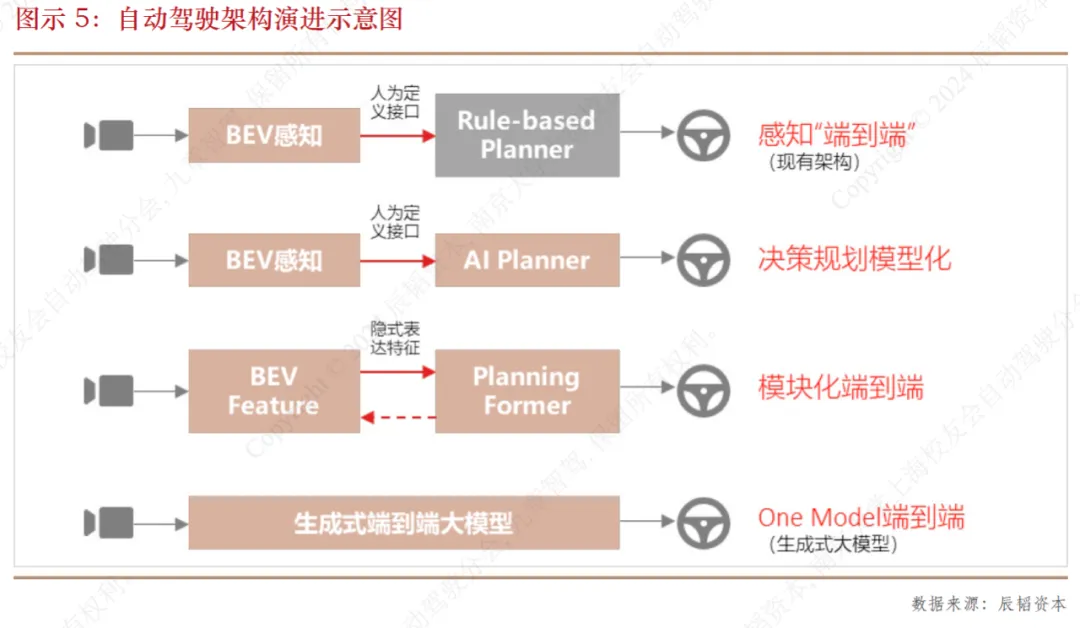

Based on this definition, and on how extensively AI is applied in the autonomous driving system, the Report divides the evolution of autonomous driving architectures into four stages:

The first stage: perception "end-to-end". The perception module achieves module-level "end-to-end" through BEV (Bird's Eye View) technology based on multi-sensor fusion.

The second stage: model-based decision-making and planning. The functional modules from prediction through decision-making to planning are integrated into a single neural network.

The third stage: modular end-to-end. The perception module no longer outputs results defined by human understanding and instead passes on feature vectors. The combined prediction, decision-making, and planning model outputs motion-planning results from those feature vectors. The two modules can no longer be trained independently; they must be trained together through gradient propagation.

The fourth stage: One Model end-to-end. There is no longer a clear division between functions such as perception and decision-making and planning; a single deep learning model maps the raw sensor input directly to the final planned trajectory.

Of these, only the last two stages meet the end-to-end definition given above.
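The joint training described for the third stage can be illustrated with a toy model. This is a minimal sketch under strong assumptions, not the Report's or any company's method: each "module" is a single scalar weight, the data is made up, and all names are hypothetical. The point it shows is that when only the final plan is supervised, the perception weight can learn only through the gradient that flows back through the planner.

```python
# Toy "modular end-to-end" model: two scalar modules trained jointly.
# perception: feature = w_perc * x      (a feature, not a human-defined label)
# planner:    plan    = w_plan * feature

def train_jointly(samples, w_perc=0.5, w_plan=0.5, lr=0.05, steps=200):
    n = len(samples)
    for _ in range(steps):
        grad_perc = 0.0
        grad_plan = 0.0
        for x, target in samples:
            feature = w_perc * x
            plan = w_plan * feature
            err = plan - target
            # Chain rule for loss = err**2, averaged over the samples.
            grad_plan += 2 * err * feature / n
            grad_perc += 2 * err * w_plan * x / n  # passes through the planner
        w_perc -= lr * grad_perc
        w_plan -= lr * grad_plan
    return w_perc, w_plan


def mean_squared_error(samples, w_perc, w_plan):
    return sum((w_plan * w_perc * x - t) ** 2 for x, t in samples) / len(samples)


# Hypothetical data: the "correct" plan is 3 * x.
data = [(0.5, 1.5), (1.0, 3.0), (1.5, 4.5)]
w_perc, w_plan = train_jointly(data)
print("loss before:", mean_squared_error(data, 0.5, 0.5))
print("loss after: ", mean_squared_error(data, w_perc, w_plan))
```

Neither weight has a target of its own here; only their product is constrained by the data, which is a small-scale picture of why the two modules cannot be trained separately.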

In addition, the Report distinguishes end-to-end from easily confused concepts such as large models, world models, and pure-vision sensor solutions, and analyzes how they relate.

In April 2016, an NVIDIA team published the paper End to End Learning for Self-Driving Cars, which presented DAVE-2, an end-to-end autonomous driving system based on a convolutional neural network (CNN).

The system processes images from the vehicle's front camera through the CNN and directly outputs the steering angle. During training, the model learns by imitating recorded driving data. The Report calls it a pioneering work in end-to-end autonomous driving in recent years.
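The idea of mapping a camera image straight to a steering angle can be illustrated with a toy forward pass. This is a minimal sketch, not the DAVE-2 architecture: the single 2x2 filter, the weights, the tiny 3x3 "frame", and all function names are hypothetical, and a real system would learn these values from driving data rather than have them set by hand.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def steering_angle(image, kernel, weight, bias):
    """Image in, steering angle out: conv -> ReLU -> global average -> linear."""
    feature_map = conv2d_valid(image, kernel)
    activations = [max(0.0, v) for row in feature_map for v in row]
    pooled = sum(activations) / len(activations)
    return weight * pooled + bias


# Hypothetical 3x3 grayscale frame with a bright region on the right.
frame = [
    [0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0],
]
edge_kernel = [[-1.0, 1.0], [-1.0, 1.0]]  # responds to left-to-right brightening
print(steering_angle(frame, edge_kernel, weight=0.5, bias=0.0))
```

There is no intermediate lane or object output anywhere in this pipeline, which is the property the early "sensor input to control output" definition refers to.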

Many players in the market are now pursuing end-to-end autonomous driving. The Report divides them into several categories:

First, automakers, such as Tesla, Xpeng Motors, Huawei's Harmony Intelligent Mobility Alliance (HIMA), NIO, and Zero One Auto.

On May 20, 2024, Xpeng Motors held its AI Day event and announced that its end-to-end large model had been deployed in vehicles.

On April 24, 2024, Huawei launched a new version of its intelligent driving system, Qiankun ADS 3.0, which implemented model-based decision-making and planning and laid the foundation for the continued evolution of its end-to-end architecture.

In addition, NIO plans to launch active safety functions based on end-to-end technology in the first half of 2024, and Zero One Auto, a new-energy heavy truck technology company, plans to deploy end-to-end autonomous driving by the end of 2024.

Second, autonomous driving algorithm and system companies, such as DeepRoute.ai, PhiGent Robotics, SenseTime's SenseAuto, and Pony.ai.

In 2022, Horizon Robotics proposed Sparse4D, an end-to-end algorithm for autonomous driving perception. In 2023, UniAD, the industry's first publicly published end-to-end autonomous driving model, whose first author is a Horizon researcher, won the Best Paper Award at CVPR 2023.

In August 2023, Pony.ai connected the three traditional modules of perception, prediction, and planning and control, unifying them into a single end-to-end autonomous driving model. The model is now deployed on both its L4 robotaxis and L2 driver-assistance passenger cars.

On the eve of the 2024 Beijing Auto Show, NVIDIA revealed the development plan for its autonomous driving business and said the second step of the plan was to "achieve a new breakthrough in L2++ systems, bringing LLMs (large language models) and VLMs (vision language models) on board to realize end-to-end autonomous driving".

At the Beijing Auto Show in April 2024, DeepRoute.ai demonstrated its high-level intelligent driving platform DeepRoute IO and an end-to-end solution built on it. SenseAuto presented UniAD, an end-to-end autonomous driving solution aimed at mass production, and DriveAGI, its next-generation autonomous driving technology. The former is of the "modular end-to-end" type, and the latter is "One Model end-to-end".

During the same show, Du Dalong, co-founder and CTO of PhiGent Robotics, revealed that GraphAD, the company's original end-to-end autonomous driving model, is ready for mass-production deployment and is being jointly developed with leading automakers.

Third, generative AI companies focused on autonomous driving, such as Lightwheel AI and GigaAI.

Founded in early 2023, Lightwheel AI has developed its own full-pipeline data and simulation solution for end-to-end. In September 2023, GigaAI launched DriveDreamer, a world model for autonomous driving that supports full-pipeline closed-loop simulation of end-to-end autonomous driving and can be extended to output end-to-end action commands directly.

Fourth, academic research institutions, such as Shanghai Artificial Intelligence Laboratory and the MARS Lab at Tsinghua University.

Since 2023, driven by Tesla's role as a benchmark, the AGI technology paradigm represented by large models, and the demand for more human-like and safer autonomous driving, industry attention to end-to-end has kept rising, and end-to-end has gradually become an industry consensus.

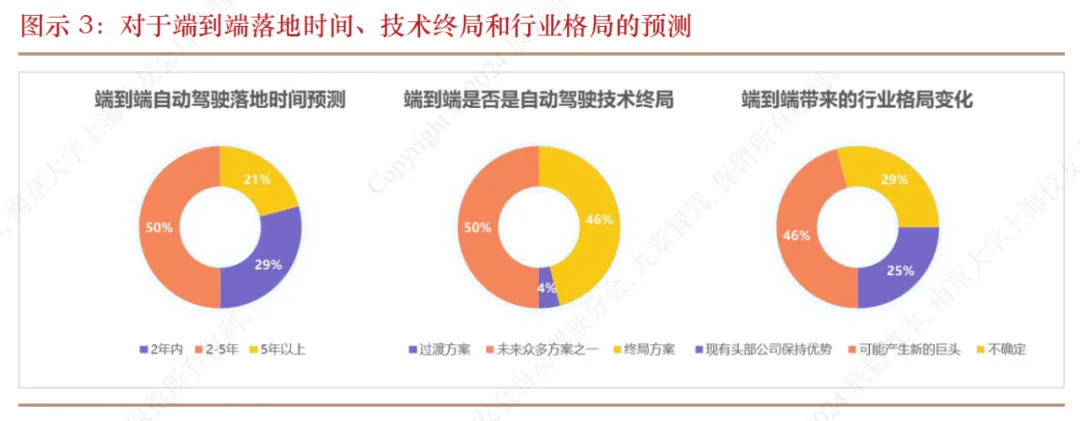

In the Report's survey of more than 30 front-line experts in the autonomous driving industry, 90% of respondents said their company has already invested in end-to-end research and development.

The Report analyzes the challenges facing end-to-end solutions, including the technical route, data and computing power requirements, testing and verification, and organizational resources.

First, differences in technical routes.

For example, "modular end-to-end" adopts a supervised learning training paradigm, while "One Model end-to-end" may lean more on autoregressive and generative training paradigms, and companies are betting on both routes. According to the Report, the technical route will gradually converge over the next 1-2 years as more companies and research institutions increase their investment in end-to-end and launch products.

Second, the demand for training data is unprecedented.

Under an end-to-end architecture, training data matters more than ever. Data volume, data labeling, data quality, and data distribution may each become a bottleneck for end-to-end applications. The Report suggests that synthetic data and a shared data platform may be possible solutions.

Third, the demand for training compute keeps rising.

Most companies say that around 100 high-compute GPUs are enough to support one end-to-end model training run. Once end-to-end enters mass production, however, the demand for training compute rises sharply.

On its Q1 2024 earnings call, Tesla said it already had 35,000 H100 GPUs and planned to increase that to more than 85,000 H100 GPUs within 2024. Before that, it had also deployed a sizable A100 GPU training cluster.

In August 2023, Xpeng announced the establishment of its "Fuyao" intelligent computing center for autonomous driving, with 600 PFLOPS of computing power (based on the FP32 performance of the NVIDIA A100 GPU, this is roughly equivalent to 30,000 A100 GPUs).
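The GPU-equivalent figure in the parenthesis can be reproduced with a short calculation. The sketch below assumes the conversion uses the A100's published peak non-tensor FP32 throughput of 19.5 TFLOPS; the article does not state which figure was used, and the function name is hypothetical.

```python
A100_FP32_TFLOPS = 19.5  # assumed: NVIDIA's published peak FP32 figure for one A100


def equivalent_gpus(cluster_pflops: float, per_gpu_tflops: float) -> float:
    """Number of GPUs whose combined peak throughput matches the cluster."""
    return cluster_pflops * 1000.0 / per_gpu_tflops  # 1 PFLOPS = 1000 TFLOPS


print(round(equivalent_gpus(600, A100_FP32_TFLOPS)))
```

Under that assumption the result is a little under 31,000 GPUs, consistent with the "about 30,000" quoted above. Peak throughput is an upper bound, so this is a rough sizing comparison rather than a measure of delivered training performance.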

In addition, SenseTime's SenseCore AI infrastructure has built out a nationwide integrated intelligent computing network with 45,000 GPUs and a total of 12,000 PFLOPS of computing power, which is expected to reach 18,000 PFLOPS by the end of 2024.

According to the Report, "Most companies developing end-to-end autonomous driving currently have training compute at the thousand-GPU level. As end-to-end gradually moves toward large models, training compute will be stretched thin."

Fourth, testing and verification methods are immature.

Existing testing and verification methods are not suited to end-to-end autonomous driving, and the industry urgently needs new methodologies and toolchains.

Fifth, organizational resources.

End-to-end requires reshaping the organizational structure and shifting resources toward data, which challenges existing organizational models.

In addition, some argue that insufficient computing power and limited interpretability are the limiting factors for end-to-end deployment; the Report reaches the opposite conclusion.

The Report predicts that Chinese autonomous driving companies may bring modular end-to-end solutions into mass production in 2025, while One Model end-to-end will arrive 1-2 years later, entering mass production between 2026 and 2027.

This will drive upstream technological progress and reshape the market and the industrial structure.

On the technology side, end-to-end deployment will accelerate progress in the upstream toolchains and chips it depends on. On the market side, a better end-to-end driving experience will raise the penetration rate of advanced driver assistance and may also push autonomous driving across regions, countries, and scenarios. In terms of industrial structure, end-to-end further raises the importance of data and AI talent, which may lead to a new division of labor and new business models.

In its early development, autonomous driving drew on the robotics industry's accumulated work in perception algorithms, planning algorithms, middleware, and sensors. In recent years, as autonomous driving technology and the industry have matured, the AGI technology paradigm offered by end-to-end autonomous driving has begun to feed back into the general-purpose humanoid robot industry.

The Report believes that as AI technology continues to advance, autonomous driving and robotics will integrate with and learn from each other more deeply, jointly bringing AGI (artificial general intelligence) into the physical world and creating greater social value.